Your website may host some of the web’s finest content. Your pages could be the most engaging and informative sources of information for your target audience. None of that will matter much if Google is unable to understand what your website represents, which is why it’s vital that you cater to search engine crawlers.

Although it’s essential that your content is optimized so that it earns the position it deserves on search engine results pages (SERPs), this doesn’t mean that you have to remove your existing articles and start from scratch.

By making the right adaptations, it’s possible to rework your articles, posts, and even videos so that they reach higher positions on Google’s results pages.

How Do ‘Crawlers’ Work?

So, what does it mean when the term ‘crawlers’ is discussed? In a nutshell, search engine crawlers browse pages throughout the internet to better understand and categorize websites for Google and other similar engines.

Often referred to as bots, crawlers index the pages of a website, determine its primary category, and assess whether it has sufficient credibility to be placed highly on the results pages.

Although this process may seem fully automated and straightforward, Google has proven time and again that it’s a fickle search engine that often alters how it categorizes websites. For example, Google’s Panda update placed a greater emphasis on content quality, demoting sites with thin or low-quality pages.

Considering the wide range of factors that go into categorizing a website and analyzing its credibility, it’s certainly worth working to create great content accordingly.

To help you to know where to start in preparing your content to rank higher and get noticed by Google’s crawlers, let’s consider a few key approaches that you can take:

Longer Content Has More Chance of Getting You Noticed

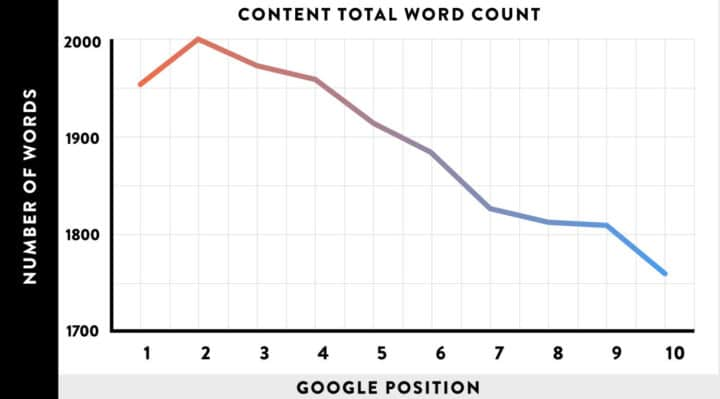

Although Google rarely spoon-feeds what its crawlers are looking for in a good website, we can gain valuable insights by spotting trends. One of these is that lengthy content tends to gain more traction on SERPs.

As we can see from the data above, the highest-placed content on Google tends towards 2,000 words, while shorter copy typically ranks lower.

Although Google hasn’t confirmed that it values long-form content higher than its shorter counterparts, we can see from trends that it’s worth expanding existing articles to ensure that they stand a better chance of getting noticed by crawlers.

Conduct an On-Page SEO Audit to Identify Problem Pages

If you’re looking to conduct an effective audit of your on-page SEO and discover your problem pages, it’s worth using a purpose-built on-page SEO checklist. This can help you to analyze your content strategy and make adjustments accordingly.

There are many small changes that can be made to better optimize your content to appease the fickle search engine crawlers out there, and these can involve actions like:

Preparing Title Tags to be Crawled: This tactic is effective because the title is the first thing that both crawlers and users alike will see when discovering your page. To optimize your title tags, remember to keep things relevant to your website’s theme and clearly describe what visitors will get when navigating to your page.

Create Informative Meta Descriptions: Acting as a supporting tool for your title tags, a meta description lets visitors know what your page is about. Here, you can make a strong case as to why your page should be visited over your rival’s.

Again, remember that Google’s crawlers use descriptions as an indicator of what your content is about, so create them with relevancy in mind.

Prioritize Internal Navigation: Crawlers like it when your pages are well connected. Orphaned content can risk falling into the vast abyss of the World Wide Web.

Furthermore, if you can organically link your content back to four or five existing articles on your site, it can help to keep readers engaged with your pages for longer. This also helps Google to see that your pages have lower bounce rates, which is another factor that can help your rankings to rise.
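The three checks above can be sketched as a small script. This is a minimal illustration using only Python’s standard library, not a full SEO checklist: the class and function names are hypothetical, and the 60- and 160-character limits are assumed rules of thumb rather than figures published by Google.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class OnPageAudit(HTMLParser):
    """Collects the title, meta description, and internal links of one page."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.title = ""
        self.meta_description = None
        self.internal_links = set()
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = (attrs.get("content") or "").strip()
        elif tag == "a" and attrs.get("href"):
            # Resolve relative links, then keep only those on the same host.
            target = urljoin(self.page_url, attrs["href"])
            if urlparse(target).netloc == urlparse(self.page_url).netloc:
                self.internal_links.add(target)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(page_url, html, max_title=60, max_description=160):
    """Return a list of warnings for one page (limits are assumptions)."""
    parser = OnPageAudit(page_url)
    parser.feed(html)
    warnings = []

    title = parser.title.strip()
    if not title:
        warnings.append("missing title tag")
    elif len(title) > max_title:
        warnings.append("title longer than %d characters" % max_title)

    if not parser.meta_description:
        warnings.append("missing meta description")
    elif len(parser.meta_description) > max_description:
        warnings.append("meta description longer than %d characters" % max_description)

    if not parser.internal_links:
        warnings.append("no internal links")
    return warnings
```

Running `audit_page` over each page of a site gives a quick list of problem pages to work through; what counts as a problem is yours to tune.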

Prevent Crawlers From Getting Lost

According to Google, it can be important to edit your website’s robots.txt file to disallow crawlers from wasting their time on your login pages, contact forms, and shopping carts. These are essentially pages that exist to provide functionality that crawlers can’t use.

Because crawlers don’t interact with customer-facing buttons like ‘Add to Cart’ and ‘Contact Us,’ running some quick edits to your website’s robots.txt file can be a great way to ensure that any bot visitors will spend their time crawling the content that they can actually understand and categorize, which helps your pages to rank more accurately.

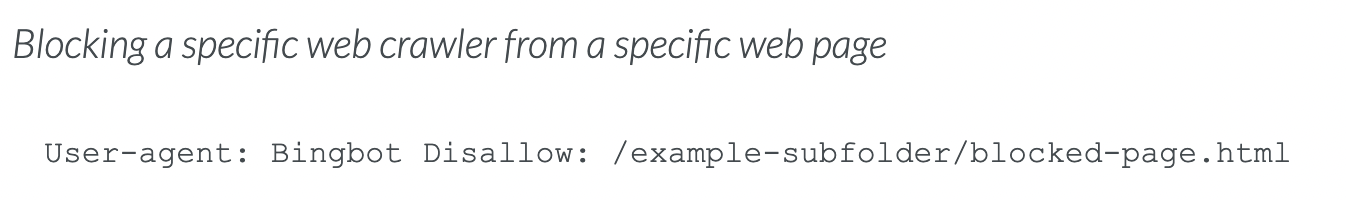

What is a robots.txt file? This is a small text-based file that can be found at the root of your website. A robots.txt file contains rules that block or allow access for all crawlers or for specific ones. Unless otherwise specified, all files are allowed to be crawled by search engine bots.

If you don’t have a robots.txt file, it’s possible to create one with just about any text editor, like Notepad or TextEdit. Simply name the file robots.txt and add it to the root of your site so that it can be reached at the path /robots.txt.

The example above from Moz illustrates how syntax can be added to block specific crawlers from wasting time on specific pages, but there are a number of great additions and measures that you can take to optimize your robots.txt file to ensure that crawlers know exactly where they stand with your content.
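Before uploading new rules, it can help to check what they would actually block. The sketch below uses Python’s standard `urllib.robotparser` module to test a handful of URLs against a set of rules. The rules, the example.com domain, and the paths are all hypothetical placeholders for the login, cart, and contact pages discussed earlier.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep all crawlers away from functional pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login/
Disallow: /cart/
Disallow: /contact/
"""


def allowed_paths(robots_txt, base_url, paths, user_agent="Googlebot"):
    """Map each path to True if the rules let user_agent crawl it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {path: parser.can_fetch(user_agent, base_url + path) for path in paths}


if __name__ == "__main__":
    report = allowed_paths(
        ROBOTS_TXT,
        "https://example.com",
        ["/login/", "/cart/checkout", "/blog/seo-tips"],
    )
    for path, allowed in report.items():
        print(path, "allowed" if allowed else "blocked")
```

Note that the standard-library parser follows the basic robots.txt rules and may not match every extension that a particular search engine supports, so treat the result as a sanity check rather than a guarantee.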

Optimizing Content Stretches Well Beyond Text

Great SEO never stops at text copy. To effectively optimize your pages, it’s essential that you consider your video content, images, and any other multimedia elements.

Google’s crawlers are smart, but they’re not yet able to understand images in the same way as humans. Because of this, it’s essential that you always remember to add alt text and descriptions where possible to ensure that search engines can index your pages accordingly.

It’s also important to avoid the temptation of copying and pasting images that are already ranking high on Google. Doing this could only hinder your visibility, because people will still be choosing the image from the same original source as you. To gain an advantage, consider using alternative graphics instead. Many of them are completely free and can be utilized in a variety of ways by websites.
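One way to find images that still need a description is to scan a page’s HTML for `img` elements with no alt text. The following is a minimal sketch using Python’s standard library, with hypothetical class and function names:

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Records the src of every <img> whose alt attribute is absent or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        # A blank alt is valid for purely decorative images, so treat the
        # results as a list to review rather than a list of errors.
        if not (attrs.get("alt") or "").strip():
            self.missing.append(attrs.get("src") or "(no src)")


def images_missing_alt(html):
    """Return the src values of images that have no alt text."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing
```

For example, passing in `'<img src="chart.png">'` returns `["chart.png"]`, which tells you that the chart needs a description.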

To help crawlers to see that your website is both relevant and responsive, it’s worth compressing your images with tools like JPEG Optimizer, Optimizilla, or Kraken.io, which will help to lower the size of your chosen pictures and make your website quicker.

Likewise, it’s vital that the same practice is applied to videos. Though it may seem like a lot of hassle to not only create video content but also add descriptive detail to it, doing so can make a world of difference in ranking higher on Google’s results pages.

Furthermore, effective use of image and video content can provide your website with a significant advantage over competitors by helping you to rank higher on Google’s image and video search functions, which generally feature far fewer quality results.

Particularly for SMEs, the process of optimizing content for crawlers may seem arduous and time-consuming. However, there are certainly great rewards for website owners who dedicate the time to optimize their content. In climbing Google’s SERPs and gaining an edge over your rivals, your on-page SEO measures can help your website to rank higher and receive more traffic.
